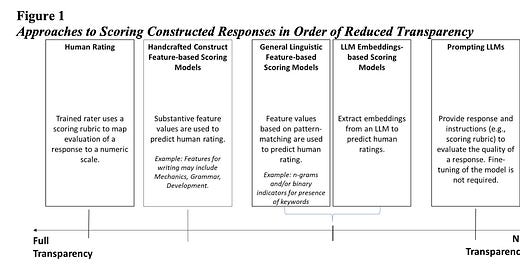

Validity Arguments For Constructed Response Scoring Using Generative Artificial Intelligence Applications

There's a lot of science that goes into scoring essays and other artifacts well, whether the grader is a human or an AI.

Casabianca, J. M., McCaffrey, D. F., Johnson, M. S., Alper, N., & Zubenko, V. (2025). Validity Arguments For Constructed Response Scoring Using Generative Artificial Intelligence Applications. arXiv. https://doi.org/10.48550/arXiv.2501.02334

This paper was super valuable right from the jump, with a reference to the (new to me) Best Practices for Constructed-Response Scoring report from ETS. That report includes a framework for establishing validity evidence for constructed response scores, whether they come from human raters or AI. When it comes to psychometrics, ETS does excellent work!

But the Casabianca et al. paper is great in its own right, providing several insights related specifically to using generative AI to grade constructed response items. Like this nugget on fine-tuning:

> An important caveat to note is that while a fine-tuned LLM may perform better for the task than the pre-trained LLM, there is a risk that changes in the test taker population may show degraded performance in a new population. Therefore, we should be careful when tuning the LLM that we are not “micro-tuning” to a population that will not be relevant in the future.
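To make that caveat a bit more concrete, here's a minimal sketch of my own (not from the paper) of one way you might watch for this kind of drift: compute human-AI agreement separately for the population the model was tuned on and for a newer one, then compare. It uses quadratic weighted kappa, a common agreement statistic in automated scoring. The function name, the 1-4 score scale, and all of the scores below are made up for illustration.

```python
from collections import Counter


def quadratic_weighted_kappa(human, machine, min_score, max_score):
    """Agreement between two lists of integer scores; bigger gaps cost more."""
    if len(human) != len(machine) or not human:
        raise ValueError("score lists must be non-empty and the same length")
    span = max_score - min_score
    if span <= 0:
        raise ValueError("max_score must be greater than min_score")

    n = len(human)
    observed = Counter(zip(human, machine))   # joint counts of (human, machine)
    human_marginal = Counter(human)           # how often humans gave each score
    machine_marginal = Counter(machine)       # how often the AI gave each score

    observed_disagreement = 0.0
    expected_disagreement = 0.0
    for i in range(min_score, max_score + 1):
        for j in range(min_score, max_score + 1):
            weight = ((i - j) / span) ** 2    # quadratic penalty, 0 on the diagonal
            observed_disagreement += weight * observed[(i, j)] / n
            expected_disagreement += (
                weight * human_marginal[i] * machine_marginal[j] / (n * n)
            )

    if expected_disagreement == 0:
        # Both raters gave everyone the same single score; kappa is undefined.
        return float("nan")
    return 1.0 - observed_disagreement / expected_disagreement


# Hypothetical scores on a 1-4 rubric (invented data, not from the paper).
tuned_human = [1, 2, 3, 4, 2, 3, 4, 1]
tuned_ai = [1, 2, 3, 4, 2, 3, 3, 1]      # population the model was tuned on
new_human = [1, 2, 3, 4, 2, 3, 4, 1]
new_ai = [2, 2, 2, 3, 3, 3, 3, 2]        # a later, different population

kappa_tuned = quadratic_weighted_kappa(tuned_human, tuned_ai, 1, 4)
kappa_new = quadratic_weighted_kappa(new_human, new_ai, 1, 4)
print(f"tuned population QWK: {kappa_tuned:.3f}")
print(f"new population QWK:   {kappa_new:.3f}")
```

A noticeable drop in agreement on the newer group would be a hint that the tuned model isn't carrying over, though a real check would need far more responses and the fuller set of evidence the paper and the ETS report describe.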

Lots of good information here if you’re interested in making AI grading of student artifacts at least as valid as human grading is.