Generative AI in Education: From Foundational Insights to the Socratic Playground for Learning

Learning from the lessons of the past to make effective AI tutors today

Hu, X., Xu, S., Tong, R., & Graesser, A. (2025). Generative AI in Education: From Foundational Insights to the Socratic Playground for Learning (No. arXiv:2501.06682). arXiv. https://doi.org/10.48550/arXiv.2501.06682

You’d be hard-pressed to find a group of authors who know more about computer-based tutoring, or “intelligent tutoring systems” (ITS). (I’ve been a fan of Graesser for decades.) This article is excellent for three things: the way it reviews the historical work on ITS and its shortcomings, the pedagogical depth it brings to its discussion of how LLMs can help overcome those historical issues, and the detailed JSON prompts provided in its Appendix.

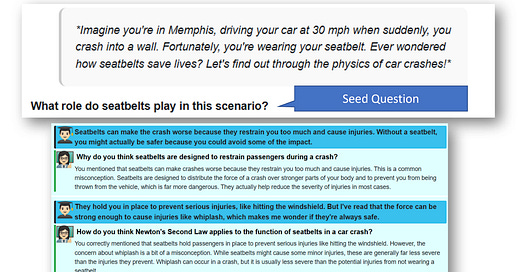

This paper explores the synergy between human cognition and Large Language Models (LLMs), highlighting how generative AI can drive personalized learning at scale. We discuss parallels between LLMs and human cognition, emphasizing both the promise and new perspectives on integrating AI systems into education. After examining challenges in aligning technology with pedagogy, we review AutoTutor—one of the earliest Intelligent Tutoring Systems (ITS)—and detail its successes, limitations, and unfulfilled aspirations. We then introduce the Socratic Playground, a next-generation ITS that uses advanced transformer-based models to overcome AutoTutor’s constraints and provide personalized, adaptive tutoring. To illustrate its evolving capabilities, we present a JSON-based tutoring prompt that systematically guides learner reflection while tracking misconceptions. Throughout, we underscore the importance of placing pedagogy at the forefront, ensuring that technology’s power is harnessed to enhance teaching and learning rather than overshadow it.
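The abstract mentions a JSON-based tutoring prompt that guides learner reflection while tracking misconceptions. The paper's actual prompts are in its Appendix. What follows is only a hypothetical sketch of the general idea, assuming the prompt is serialized as JSON and the model is asked to reply in JSON. Every field name (`tracked_misconceptions`, `new_misconceptions`, and so on), both helper functions, and the sample reply are invented for illustration, and no LLM is actually called.

```python
import json

# HYPOTHETICAL schema: field names are illustrative only and are NOT
# taken from the paper's Appendix.
def build_tutoring_prompt(topic, question, learner_answer, misconceptions):
    """Assemble a JSON-formatted Socratic tutoring prompt for an LLM."""
    prompt = {
        "role": "Socratic tutor",
        "topic": topic,
        "instructions": [
            "Do not give the answer directly.",
            "Ask one guiding question at a time.",
            "Ask the learner to explain their reasoning.",
        ],
        "current_question": question,
        "learner_answer": learner_answer,
        # Misconceptions observed in earlier turns, carried forward.
        "tracked_misconceptions": sorted(misconceptions),
        # The shape we ask the model to use for its reply.
        "output_format": {
            "feedback": "string",
            "next_question": "string",
            "new_misconceptions": "list of strings",
        },
    }
    return json.dumps(prompt, indent=2)


def update_misconceptions(tracked, model_reply_json):
    """Merge misconceptions reported in the model's JSON reply into the tracked set."""
    reply = json.loads(model_reply_json)
    return tracked | set(reply.get("new_misconceptions", []))


# Usage: one tutoring turn with a made-up model reply.
tracked = {"heavier objects fall faster"}
prompt = build_tutoring_prompt(
    topic="Newtonian mechanics",
    question="Why does a dropped ball accelerate?",
    learner_answer="Its weight keeps pushing it harder and harder.",
    misconceptions=tracked,
)
reply = '{"feedback": "...", "next_question": "...", "new_misconceptions": ["force grows with speed"]}'
tracked = update_misconceptions(tracked, reply)
print(sorted(tracked))  # → ['force grows with speed', 'heavier objects fall faster']
```

Keeping the misconception list outside the model and passing it back in on every turn is one simple way to make tracking persist across a conversation; the paper's own prompts may handle this differently.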